Day 5: System Testing

In the testing hierarchy, system testing is the third level; it comes after integration testing.

System testing, a.k.a. end-to-end (E2E) testing, is testing conducted on a complete software system.

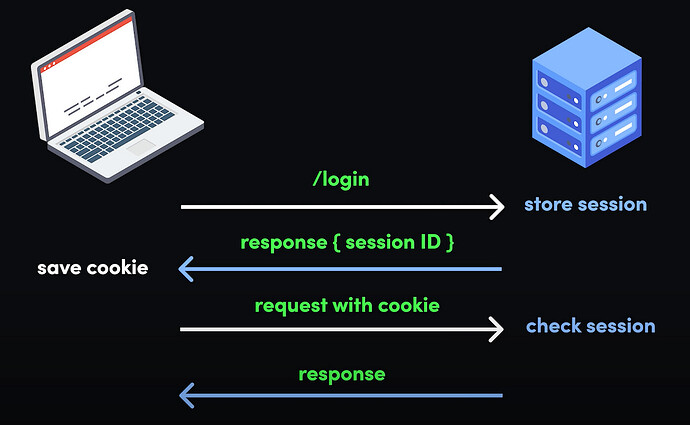

System testing means testing at the level of the whole system, in contrast to testing at the integration or unit level. It focuses on complete workflows or user journeys through the system. It can use black-box testing techniques but may also involve white-box testing of backend processes, API integrations, and database validation.
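To make the black-box versus white-box distinction concrete, here is a minimal Python sketch. The `place_order` function, the `orders` table, and its columns are hypothetical stand-ins invented for illustration, and an in-memory SQLite database plays the role of the backend. A real system test would run against the deployed application and its actual database.

```python
import sqlite3


def make_database():
    """Create an in-memory database with a hypothetical orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders ("
        "id INTEGER PRIMARY KEY, user_id INTEGER, product TEXT, "
        "quantity INTEGER, status TEXT)"
    )
    return conn


def place_order(conn, user_id, product, quantity):
    """Hypothetical backend call: store an order and return its ID."""
    cur = conn.execute(
        "INSERT INTO orders (user_id, product, quantity, status) "
        "VALUES (?, ?, ?, ?)",
        (user_id, product, quantity, "PLACED"),
    )
    conn.commit()
    return cur.lastrowid


conn = make_database()

# Black-box check: inspect only what the caller can see (the returned ID).
order_id = place_order(conn, user_id=7, product="running shoes", quantity=2)
assert order_id is not None

# White-box check: look inside the database the system wrote to.
row = conn.execute(
    "SELECT user_id, product, quantity, status FROM orders WHERE id = ?",
    (order_id,),
).fetchone()
assert row == (7, "running shoes", 2, "PLACED")
```

The first assertion would pass even if the order were stored with the wrong quantity; the second one catches that kind of defect, which is why database validation is often added to an otherwise black-box system test.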

System testing is similar to demoing a software product. If the product is an e-commerce website, for example, end-to-end testing would typically walk through the user's workflow from login to checkout.

Scenario: Ordering a Product on an E-commerce Platform

Test Objective:

Validate the full functionality of the e-commerce platform, from browsing a product to completing an order.

Steps:

- User Login:
  - Open the website.
  - Navigate to the login page.
  - Enter valid credentials.
  - Verify successful login and redirection to the homepage.
- Product Search and Selection:
  - Use the search bar to find a specific product (e.g., “running shoes”).
  - Filter results by brand, size, color, and price range.
  - Select a product from the search results.
- Add to Cart:
  - View product details (e.g., description, price, reviews).
  - Choose size and quantity.
  - Click “Add to Cart” and verify that the product appears in the cart.
- Checkout Process:
  - Go to the cart and review the selected items.
  - Click “Proceed to Checkout.”
  - Enter shipping information (e.g., address, contact number).
  - Select a payment method (e.g., credit card, PayPal).
  - Verify that discounts, shipping charges, and taxes are calculated correctly.
  - Click “Place Order.”
- Payment:
  - Enter payment details and confirm the transaction.
  - Verify successful payment processing.
- Order Confirmation:
  - Validate that an order confirmation page appears with an order ID, estimated delivery date, and summary of the purchase.
  - Check that a confirmation email/SMS is sent to the user.
- Admin Verification:
  - Log in to the admin portal.
  - Verify that the order appears in the system with accurate details.
- Third-Party Integrations:
  - Ensure that the payment gateway processes the transaction successfully.
  - Validate that the shipping partner receives the correct order details.
- Order Tracking:
  - Log in as the customer.
  - Go to “My Orders” and track the order status.
- Order Delivery and Feedback:
  - Simulate order delivery.
  - Provide feedback or rate the product on the platform.
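A few of the steps above (login, search, add to cart, checkout, and admin verification) can be sketched as a single automated journey. This is only an illustration: `FakeStore` is a hypothetical in-memory stand-in for the platform, and the user, price, 10% tax rate, and flat shipping fee are assumed values. A real end-to-end test would drive the actual website through a browser automation tool or its API.

```python
from decimal import Decimal

TAX_RATE = Decimal("0.10")      # assumed 10% tax, for illustration only
SHIPPING_FEE = Decimal("5.00")  # assumed flat shipping charge


class FakeStore:
    """Hypothetical in-memory stand-in for the e-commerce platform."""

    def __init__(self):
        self.users = {"alice": "s3cret"}
        self.catalog = {"running shoes": Decimal("80.00")}
        self.current_user = None
        self.cart = []    # list of (product, quantity) pairs
        self.orders = {}  # order_id -> details, as an admin portal would show

    def login(self, username, password):
        if self.users.get(username) != password:
            return False
        self.current_user = username
        return True

    def search(self, term):
        return [name for name in self.catalog if term.lower() in name.lower()]

    def add_to_cart(self, product, quantity):
        self.cart.append((product, quantity))

    def checkout(self, discount=Decimal("0.00")):
        subtotal = sum(
            (self.catalog[p] * q for p, q in self.cart), Decimal("0.00")
        )
        taxable = subtotal - discount
        total = taxable + taxable * TAX_RATE + SHIPPING_FEE
        order_id = len(self.orders) + 1
        self.orders[order_id] = {
            "user": self.current_user,
            "items": list(self.cart),
            "total": total,
            "status": "CONFIRMED",
        }
        self.cart.clear()
        return order_id


def run_order_journey():
    store = FakeStore()

    # User login
    assert store.login("alice", "s3cret")

    # Product search and selection
    results = store.search("shoes")
    assert results == ["running shoes"]

    # Add to cart
    store.add_to_cart(results[0], quantity=2)
    assert store.cart == [("running shoes", 2)]

    # Checkout: (2 * 80.00 - 10.00) = 150.00, plus 15.00 tax, plus 5.00 shipping
    order_id = store.checkout(discount=Decimal("10.00"))

    # Admin verification: the stored order carries accurate details
    order = store.orders[order_id]
    assert order["user"] == "alice"
    assert order["total"] == Decimal("170.00")
    assert order["status"] == "CONFIRMED"
    return order


order = run_order_journey()
```

The journey is written as one function on purpose: each step depends on the state left behind by the previous one, which is what separates a system-level test from a set of isolated unit tests.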