Day 53: Axios vs. React Query: Which One Should You Use in Your React App?

When building a React app, fetching data is a must. But how do you do it efficiently? Two popular options are Axios and React Query. While both can get the job done, they serve different purposes. Let’s break it down for beginners.

Axios: The Classic Choice

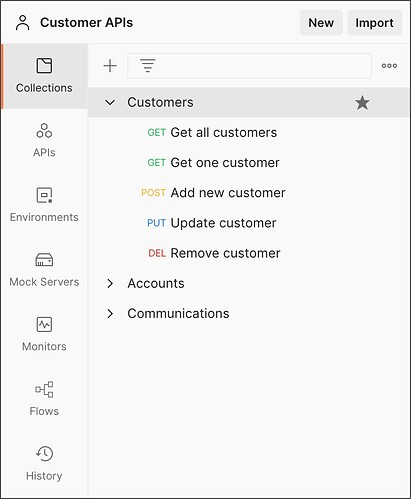

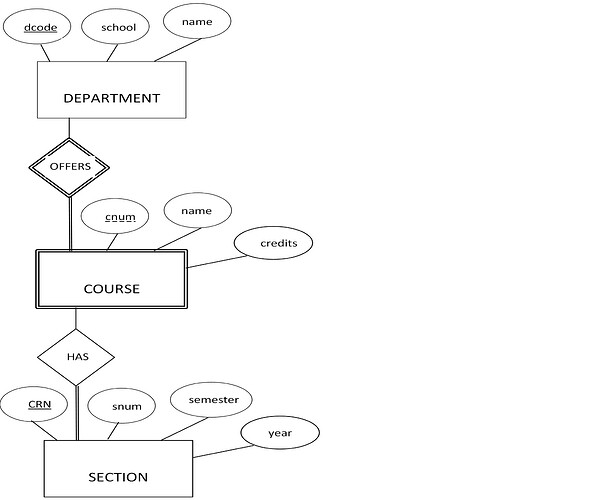

Axios is a promise-based HTTP client that simplifies making requests to APIs. It’s widely used for sending GET, POST, PUT, and DELETE requests and handling their responses.

Why use Axios?

Simple API for making HTTP requests (like axios.get(url))

Supports request and response interceptors (for example, to attach an auth token or log errors in one place)

Allows setting global headers (like authentication tokens)

Works with Node.js as well

What it lacks:

No built-in caching or data synchronization

You have to manage loading state, error state, and retries yourself

Doesn’t handle automatic background refetching

Example usage:

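```javascript
import axios from 'axios';

// Hypothetical endpoint; replace with your own API URL
const API_URL = 'https://api.example.com/data';

const fetchData = async () => {
  try {
    // axios.get resolves with a response object; the parsed body is in response.data
    const response = await axios.get(API_URL);
    console.log(response.data);
  } catch (error) {
    // Axios rejects on network errors and, by default, on non-2xx status codes
    console.error(error);
  }
};

fetchData();
```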
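The example above only logs the result. Inside a real component you would also track loading and error state and, if you want resilience, retry failed requests yourself. Here is a minimal sketch of that bookkeeping. `requestWithRetry` and its options are hypothetical names (not part of Axios), and the helper accepts any promise-returning function, such as `() => axios.get(url)`, so it can run without a network:

```javascript
// Hypothetical helper, not part of Axios: the loading/error/retry
// bookkeeping you write by hand when using Axios on its own.
// `request` is any function returning a promise that resolves to { data },
// e.g. () => axios.get(url).
async function requestWithRetry(
  request,
  { retries = 3, baseDelayMs = 500, onStateChange = () => {} } = {},
) {
  onStateChange({ status: 'loading', data: undefined, error: undefined });
  for (let attempt = 0; ; attempt++) {
    try {
      const response = await request();
      onStateChange({ status: 'success', data: response.data, error: undefined });
      return response.data;
    } catch (error) {
      // Out of retries: report the error and let the caller handle it
      if (attempt >= retries) {
        onStateChange({ status: 'error', data: undefined, error });
        throw error;
      }
      // Exponential backoff: 500 ms, 1000 ms, 2000 ms, ... with the default base
      const delay = baseDelayMs * 2 ** attempt;
      await new Promise((resolve) => setTimeout(resolve, delay));
    }
  }
}
```

With Axios installed, a call might look like `requestWithRetry(() => axios.get(url), { onStateChange: (state) => console.log(state.status) })`. This is roughly the work React Query takes off your hands: by default it retries a failed query three times with exponential backoff and exposes the loading and error states for you.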

React Query: The Smart Choice?

React Query (now published as TanStack Query) is a library for fetching, caching, and synchronizing server data in React. It doesn’t send HTTP requests itself: you give it a function that does (using fetch or Axios), and it manages the loading, error, and cached states of the result.

Why use React Query?

Built-in caching (reduces unnecessary API calls)

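Automatic refetching of stale data (for example, when the window regains focus or the network reconnects)

Background updates (cached data shows instantly while fresh data loads behind the scenes)

Error handling and retries out of the box (failed queries are retried three times by default)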

Infinite scrolling & pagination support
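To make "caching" and "stale" concrete: React Query stores fetched data under a query key and treats it as fresh for `staleTime` milliseconds (0 by default, so data counts as stale right away). Stale data is still shown immediately, and a refetch runs in the background on triggers such as a component mounting or the window regaining focus. The sketch below illustrates that stale-while-revalidate idea with a hypothetical `createQueryCache` helper; it is a simplified model written for illustration, not React Query's API or implementation:

```javascript
// Hypothetical, simplified cache illustrating the idea behind React Query's
// caching. Not React Query's real API or implementation.
function createQueryCache({ staleTime = 0, now = () => Date.now() } = {}) {
  const entries = new Map(); // key -> { data, updatedAt, inFlight }

  function startFetch(key, fetcher) {
    const entry = entries.get(key);
    // Deduplicate: reuse a request for this key that is already running
    if (entry?.inFlight) return entry.inFlight;
    const inFlight = fetcher().then(
      (data) => {
        entries.set(key, { data, updatedAt: now(), inFlight: null });
        return data;
      },
      (error) => {
        // Keep any previously cached data; just clear the in-flight marker
        const current = entries.get(key);
        if (current) current.inFlight = null;
        throw error;
      },
    );
    entries.set(key, { ...(entry ?? {}), inFlight });
    return inFlight;
  }

  async function get(key, fetcher) {
    const entry = entries.get(key);
    if (!entry || entry.data === undefined) {
      // Nothing cached yet: the caller has to wait for the network
      return startFetch(key, fetcher);
    }
    // With staleTime = 0 (the default here), cached data is always stale
    const isStale = now() - entry.updatedAt >= staleTime;
    if (isStale) {
      // Serve cached data now and refresh it in the background;
      // a failed background refresh is ignored and the old data stays
      startFetch(key, fetcher).catch(() => {});
    }
    return entry.data;
  }

  return { get };
}
```

Requests for the same key that arrive while one is already in flight share it, which mirrors React Query's request deduplication. The `now` option exists only so the clock can be controlled in a test.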

What it lacks:

Slightly steeper learning curve for beginners

Adds an extra dependency (though a lightweight one)

Might be overkill for simple projects

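Example usage (written for TanStack Query v5, published as @tanstack/react-query; older react-query v3 code used the positional form useQuery('myData', fetchData)):

```jsx
import { QueryClient, QueryClientProvider, useQuery } from '@tanstack/react-query';
import axios from 'axios';

// Hypothetical endpoint; replace with your own API URL
const API_URL = 'https://api.example.com/data';

// The query function: Axios makes the request, React Query manages the result
const fetchData = async () => {
  const { data } = await axios.get(API_URL);
  return data;
};

const MyComponent = () => {
  // queryKey identifies the cached data; queryFn fetches it
  const { data, isPending, error } = useQuery({ queryKey: ['myData'], queryFn: fetchData });

  if (isPending) return <p>Loading…</p>;
  if (error) return <p>Error: {error.message}</p>;
  // Render the data however your app needs
  return <pre>{JSON.stringify(data, null, 2)}</pre>;
};

// useQuery needs a QueryClient provided higher up in the component tree
const queryClient = new QueryClient();

export const App = () => (
  <QueryClientProvider client={queryClient}>
    <MyComponent />
  </QueryClientProvider>
);
```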

Which One Should You Use?

It depends on your needs! Here’s a quick comparison:

| Feature | Axios | React Query |
| --- | --- | --- |
| Simple API requests | ✅ | ✅ |
| Global headers | ✅ | ❌ (but can be set via Axios) |
| Caching | ❌ | ✅ |
| Auto-refetching | ❌ | ✅ |
| Error handling | Manual | ✅ Built-in |
| Background sync | ❌ | ✅ |
| Pagination support | ❌ | ✅ |

Use Axios if:

You just need to fetch data without advanced state management.

Your app is small, and you don’t need caching or auto-refetching.

You want full control over API requests and responses.

Use React Query if:

You want automatic caching, retries, and background refetching.

Your app relies on frequently updated server data that should stay in sync.

You need built-in pagination or infinite scrolling.
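If pagination or infinite scrolling is what tips the decision, it helps to know what React Query asks of you. In TanStack Query v5, `useInfiniteQuery` takes an `initialPageParam` and a `getNextPageParam(lastPage, allPages)` function, and it returns `fetchNextPage` and `hasNextPage`; returning `undefined` or `null` from `getNextPageParam` means there are no more pages. With Axios alone, that bookkeeping is yours to write. The `createInfinitePager` helper below is a hypothetical, dependency-free sketch of the same contract, not the library's implementation; `fetchPage` stands in for something like a cursor-based `axios.get` call:

```javascript
// Hypothetical sketch of the useInfiniteQuery contract; not TanStack Query's implementation.
// fetchPage(pageParam): returns a promise for one page of results.
// getNextPageParam(lastPage, allPages): returns the next pageParam,
// or undefined/null when there are no more pages.
function createInfinitePager({ fetchPage, initialPageParam, getNextPageParam }) {
  const pages = [];
  let nextPageParam = initialPageParam;
  let inFlight = null; // the page request currently loading, if any

  function fetchNextPage() {
    // Ignore repeated calls (e.g. scroll events firing twice) while a page is loading
    if (inFlight) return inFlight;
    // Nothing left to load: resolve with the pages already fetched
    if (nextPageParam == null) return Promise.resolve(pages);
    inFlight = fetchPage(nextPageParam)
      .then((page) => {
        pages.push(page);
        nextPageParam = getNextPageParam(page, pages);
        return pages;
      })
      .finally(() => {
        inFlight = null;
      });
    return inFlight;
  }

  return {
    fetchNextPage,
    get pages() {
      return pages;
    },
    get hasNextPage() {
      return nextPageParam != null;
    },
  };
}
```

The `== null` check (rather than a falsy check) matters because `0` is a perfectly valid first page or cursor.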

And as always, happy coding!