Hello,

we have a collection of 1.3 million documents.

Documents have a flat structure, with no arrays.

Aggregation pipeline has 2 stages - $match by stage_id, uq_value and timestamp, and group by stage_id and uq_value, accumulating last element of the group with $last: “$$ROOT”.

stage_id and uq_value are strings, timestamp is Date field.

There is also compound index having stage_id ASC, uq_value ASC and timestamp ASC.

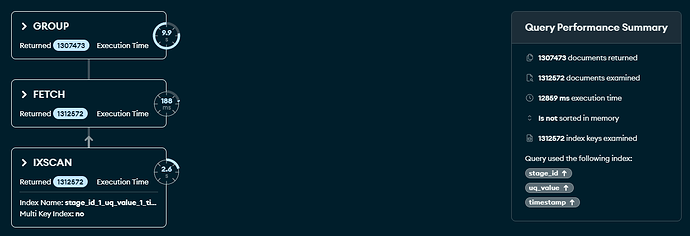

According to Explain Plan, query takes ~ 12 secs.

Here’s the Explain Plan output:

Mongo database should have enough memory for the covered query, memory is on 75%, so I do not get why it doesn’t happen and how to improve this query to run faster, preferably bellow 1 sec. I assume it’s possible.

Here is query example:

[

{

$match: {

stage_id: {

$in: [

"id_1",

"id_2",

//and so on, 30 values here

]

},

timestamp: {

$gte: ISODate("date_from"),

$lte: ISODate("date_to")

}

}

},

{

$group: {

_id: {stage_id: "$stage_id", uq_value: "$uq_value"},

last_event: {

$last: "$$ROOT"

}

}

}

]

uq_value is missing here in $match, because all values are included. If there is filter by that field, it will appear in $match after stage_id condition.

Can anyone help with this?

Thanks in advance!